How Feedforward Neural Networks (FFNs) Work

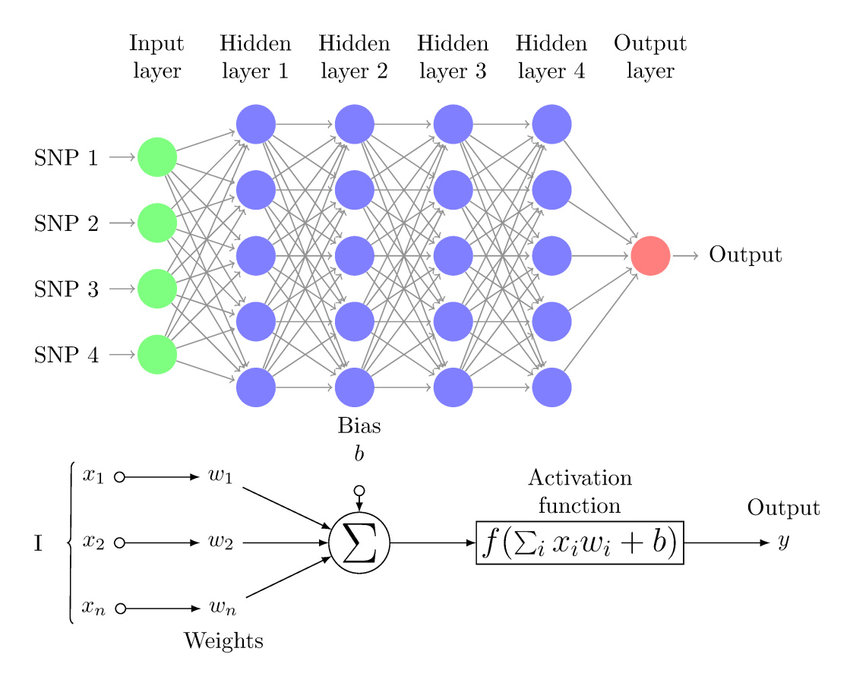

- Input Layer: Receives the input features (e.g., SNPs (single-nucleotide polymorphisms) or other data points) and passes them to the first hidden layer.

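- Hidden Layers:
  - One or more layers of nodes that transform the input. In a fully connected (dense) FFN, every node receives the outputs of all nodes in the previous layer.
  - Each node computes a weighted sum of its inputs, adds its bias, and applies an activation function to the result.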

- Weights and Biases: Each connection between neurons has a weight, and each neuron has a bias that is added to the weighted sum of its inputs. Both are learned during training.

- Activation Function: Introduces non-linearity. Without it, any stack of layers would collapse into a single linear (affine) transformation, so the network could only model linear relationships. Common examples include sigmoid and ReLU.

- Forward Propagation: Data flows in one direction, from the input layer through the hidden layers to the output layer, with no loops or feedback connections.

- Output Layer: Produces the final result, such as a classification or prediction, based on the processed input.

- Applications: Widely used in tasks like image classification, regression, and time-series prediction.
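The per-node computation described above (weighted sum of inputs, plus a bias, passed through an activation function) can be sketched in plain Python. This is a minimal illustration, not how TensorFlow implements it internally; the helper names `sigmoid`, `relu`, and `neuron` and all input, weight, and bias values are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Squash z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Return z if it is positive, otherwise 0."""
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """One node: weighted sum of the inputs, plus a bias, through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # pre-activation value
    return activation(z)


# Hypothetical values, chosen only so the arithmetic is easy to follow:
# z = 1.0*0.5 + 2.0*(-1.0) + 3.0*0.5 + 1.0 = 1.0
x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 0.5]
b = 1.0
print(neuron(x, w, b, relu))     # 1.0
print(neuron(x, w, b, sigmoid))  # ≈ 0.731
```

Swapping `relu` for `sigmoid` changes only the last step; the weighted sum and bias are the same for both.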
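Forward propagation can likewise be sketched as a chain of layer computations, ending in a softmax output layer that turns raw scores into class probabilities. The sketch assumes a tiny hypothetical network (2 inputs, 3 ReLU hidden nodes, 2 softmax outputs) with hand-picked weights. `dense`, `softmax`, and `forward` are illustrative helper names, and storing one weight row per output node is a choice made here for readability (Keras's `Dense` layer stores its kernel with shape `(input_dim, units)` instead).

```python
import math


def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: weights[j][i] links input i to output node j."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, w_row)) + b
        outputs.append(activation(z) if activation is not None else z)
    return outputs


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, params):
    """Forward propagation: input -> hidden layer (ReLU) -> output layer (softmax)."""
    (w1, b1), (w2, b2) = params
    hidden = dense(x, w1, b1, relu)
    logits = dense(hidden, w2, b2)
    return softmax(logits)


# Hypothetical weights and biases: one (weights, biases) pair per layer
params = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),  # hidden layer
    ([[1.0, 0.0, -1.0], [-1.0, 1.0, 1.0]], [0.0, 0.0]),         # output layer
]
probs = forward([2.0, 1.0], params)
print(probs)
```

With these weights the hidden layer outputs `[1.0, 1.5, 0.0]`, the output layer produces logits `[1.0, 0.5]`, and softmax maps them to probabilities of roughly 0.62 and 0.38.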

FFN Code Example

Here's how we can define the layers of an FFN with TensorFlow's Keras API:

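```python
import tensorflow as tf
from tensorflow.keras import layers

# A fully connected feedforward network for 10-class classification
model = tf.keras.Sequential([
    layers.Input(shape=(784,)),              # Input layer: 784 features, e.g. a 28x28 image flattened into a vector
    layers.Dense(128, activation="relu"),    # Hidden layer 1: 128 ReLU nodes
    layers.Dense(64, activation="relu"),     # Hidden layer 2: 64 ReLU nodes
    layers.Dense(10, activation="softmax"),  # Output layer: probabilities over 10 classes
])

model.summary()  # print each layer's output shape and parameter count
```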
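As a worked check on the size of this model: a `Dense` layer with `n_in` inputs and `n_out` nodes holds `n_in * n_out` weights plus `n_out` biases. The hypothetical helper `dense_param_count` below applies that rule to the layer sizes used in the Keras example.

```python
def dense_param_count(layer_sizes):
    """Count trainable parameters in a stack of fully connected layers.

    Each layer mapping n_in inputs to n_out nodes has an n_in x n_out
    weight matrix plus one bias per node.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# 784 inputs -> 128 -> 64 -> 10, as in the Keras model
print(dense_param_count([784, 128, 64, 10]))  # 109386
```

That is 100,480 + 8,256 + 650 parameters for the three `Dense` layers; the total should match what Keras's `model.summary()` reports for this model.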